Computers can do more and more things remarkably well.1 The days that humans can beat computers at chess are long gone and recently Go has suffered the same fate.2 3 With advancements in machine learning, computers are even getting better at less well defined tasks like image and voice recognition. But there are still tons of tasks that humans can do much better: cooking, programming, having a conversation, etc. So far, no computer comes close to passing the Turing test.

World chess champion Garry Kasparov was defeated by chess computer

Deep Blue in 1997. Photo by Owen Williams.4

World chess champion Garry Kasparov was defeated by chess computer

Deep Blue in 1997. Photo by Owen Williams.4

But in this post I want to look at a different kind of problems. Problems that are very well defined, such that they have a specific input and every specific input has an objectively correct answer. And from those we will consider only the problems that have a yes-no answer, these are what we call decision problems in computer science.5

Decision problems that take long to solve

In complexity theory a problem is called hard when it takes long on our computers to solve large instances of the problem. So then the longer it takes on large instances, the harder the problem would be.

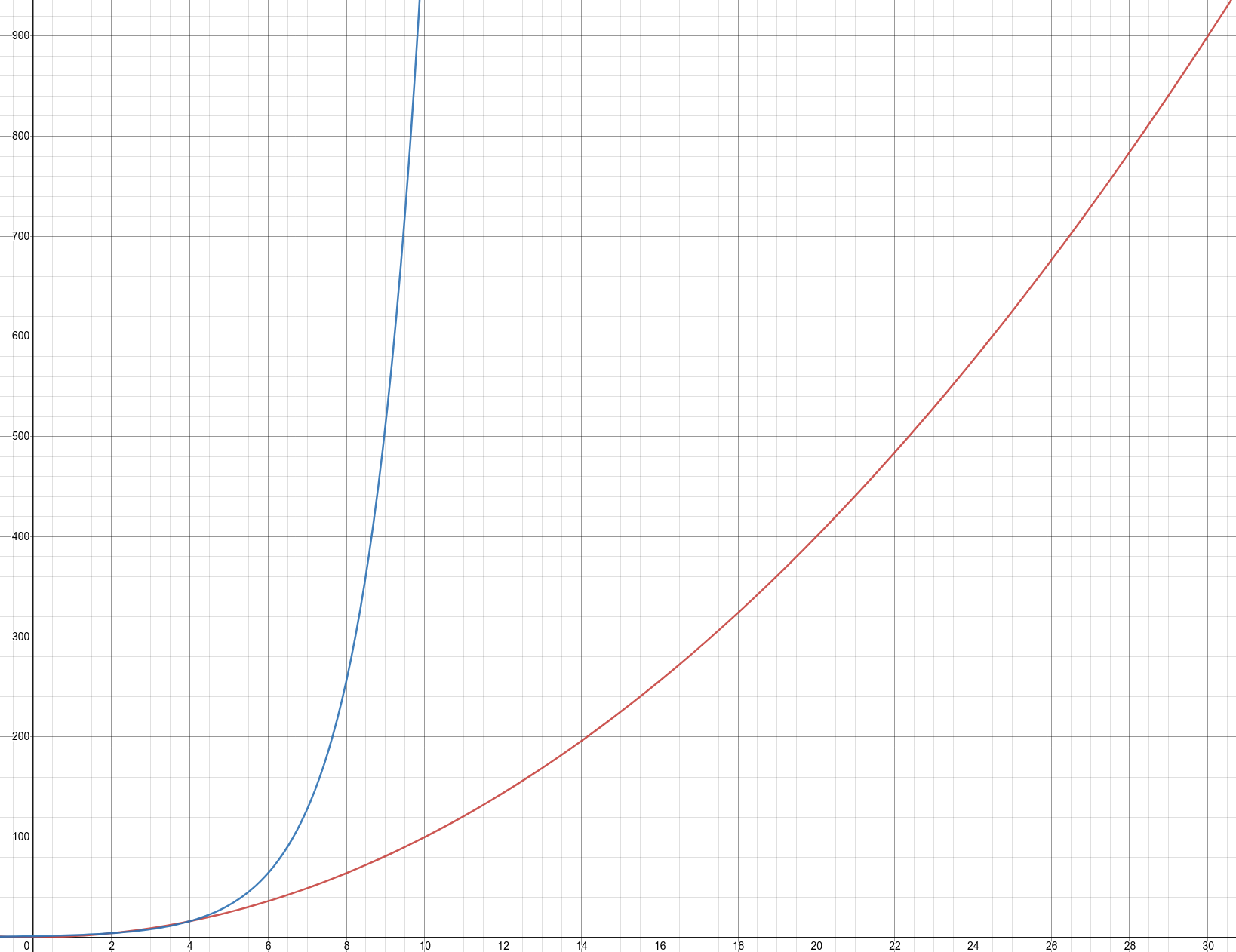

In the graph you can see that 2^x (blue) grows much quicker than x^2 (red) for large values.

If you have some computer science background, you might know about NP-hardness. If a problem is NP-hard, then there is no algorithm that can solve it in polynomial time if P≠NP.6 For example SAT, the problem of deciding if a given propositional formula is satisfiable, is NP-hard.

Such a problem that has no polynomial time algorithm is clearly harder than a problem in P, since it takes longer for our computers to solve that problem. And there is a whole wealth of complexity classes with problems that have different time and space needs for large input sizes. But all these problems can still be solved if we have enough time and space and we can do better than that. Let’s instead look at some unsolvable problems!

The halting problem

The halting problem is the problem of deciding whether a given computer program will eventually terminate. Some computer programs always terminate (examples in Python):

print("Hello world!")

So in the halting problem, when we are given this program, the correct answer is yes. But other programs will never terminate:

while True:

print("I'm stuck")

This program will never exit its loop, so the correct answer is no. This might seem pretty straight forward, so why is this problem undecidable? When Turing proved this in 1936, computer science was just getting started, so he had to device a whole model for computation to proof this. However, the idea is suprisingly straight forward.

Suppose that the halting problem is decidable, then we should be able

to make a function halts that takes a function and returns whether

it halts in finite time. But then we can construct the following

program:

def g():

if halts(g):

while True:

print("I'm stuck")

Now consider g. If halts(g) returns True, then g never halts,

which is in contradiction with the fact that halts is our halting

function. If halts(g) returns False, then g does halt, but again

this is in contradiction with the fact that halts is our halting

function. So a halting function simply can’t exist and so the halting

problem is not decidable.7

Alan Turing showed that the halting problem was undecidable in 1936

And it turns out there are many more problems that are undecidable, just like the halting problem.8 Some interesting examples are the Entscheidungsproblem and solving Diophantine equations. But are all unsolvable problems of the same caliber? Or are some harder then others?

Turing degrees

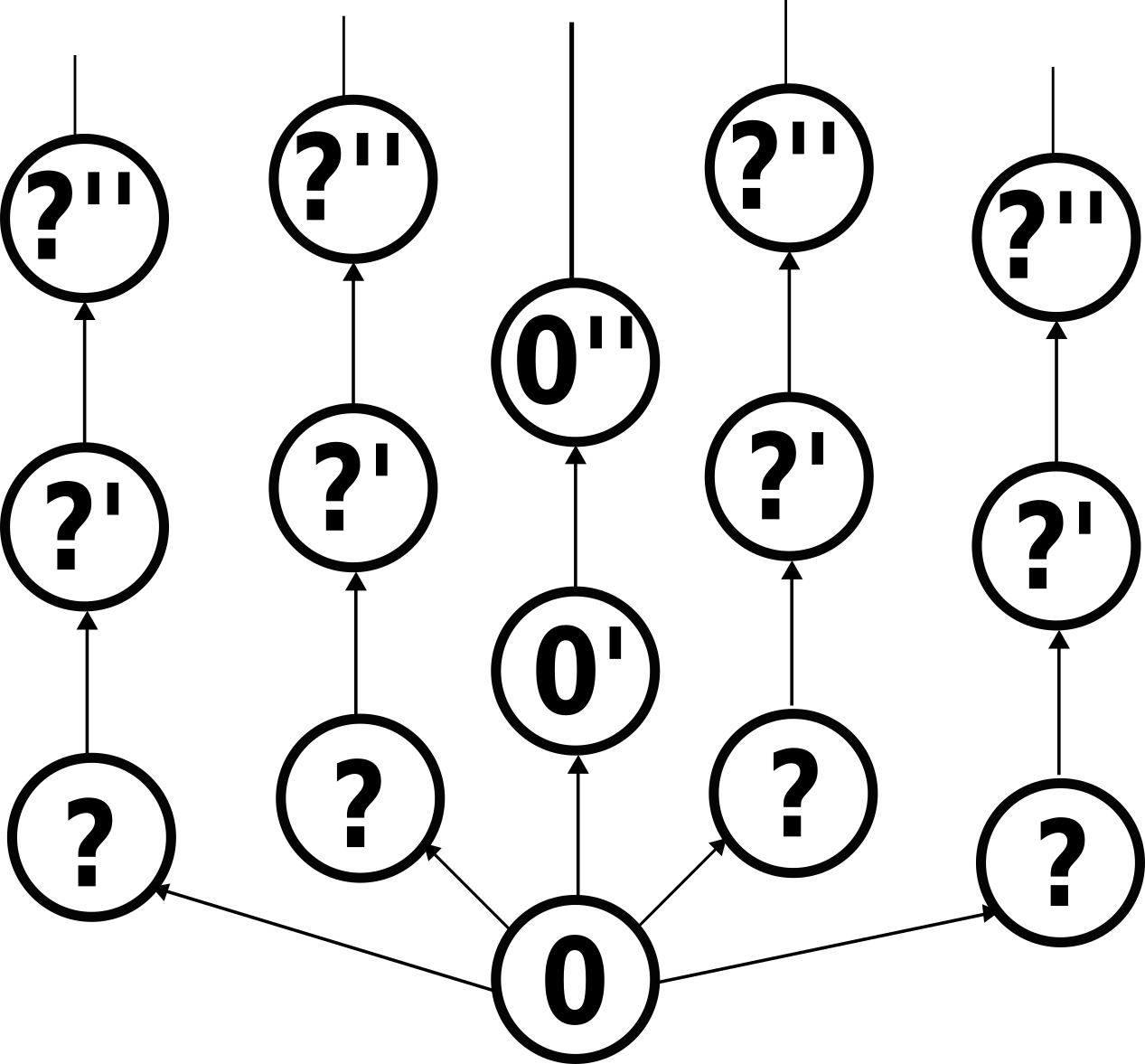

To answer this question, we are forced to further define what we mean with hard. Luckily, now we enter the realm of computability theory and here we have a whole hierarchy of just how unsolvable a problem is. We call this level of unsolvability the Turing degree of the problem and the higher it is, the harder the problem.9 10

Rough sketch of the partial order of Turing degrees, with infinitely many Turing degrees missing (also between the different degrees).

The Turing degrees are defined through the concept of Turing reducibility. A problem X is Turing reducible to a problem Y if we can write a program that solves problem X if we get answers to problem Y for free.11 Two problems have the same Turing degree if they are Turing reducible to each other and one Turing degree a is lower than a Turing degree b if all problems with Turing degree a are Turing reducible to a problem with Turing degree b. This means that if we can solve a problem with Turing degree n then we can solve all problems with Turing degree n or lower. So the higher the Turing degree, the harder the problem!

Obviously all computable problems are Turing reducable to all other problems, because we can already write a program to solve them, even if we don’t get the answers to any other problem for free. Because of this all the computable problems are in the lowest Turing degree, which we call 0. The halting problem has a Turing degree which we call 0’, which is strictly higher than 0, because we can’t solve the halting problem when we only get the answer to a computable problem.

Harder problems

So with our new definition of hardness, when we ask for a harder

problem than the halting problem, we simply want to find a problem

that we can’t solve even if we magically did have this halts

function. So lets imagine we import some library that gives us this

halts function, for any Python function we pass to halts it will

tell us in finite time whether that function halts.12 13 Then

what are the problems we still can’t solve?

We can use a similar argument as Turing did for the halting problem

and define the halting^2 problem as deciding whether a given

Python function that can use the halts function halts. Let’s

assume that a function exists that solves this and we can define it

using only ordinary Python and the halts function, we’ll call it

halts2. Now we define the function h as:

from oracles import halts

def h():

if halts2(h):

while True:

print("I'm stuck")

But now we have the same paradox as before! So halts2 can’t be

defined only using Python and halts. Which means that according to

our definition of hardness, the halting^2 problem is harder than the

halting problem. And we can keep doing this, defining halting^3,

halting^4, etc. Each of them will be strictly harder than the

previous.

So we run into the same problem that we get when someone asks us what the largest natural number is. There is no largest number, because for every natural number we are given, we can give a higher one. Similarly, there is no hardest problem, for any given hard problem, we can give a harder one.16 Still, this allows us to give some very hard problems, like halting^(10^10), halting^(googolplex) or halting^(BusyBeaver(googolplex)).17

Other Turing degrees

This halting trick doesn’t even give us all possible hard problems. It actually turns out that there are infinitely many other problems that are not Turing reducible to any of these ordinary halting problems and to which none of the halting problems are reducible.18 And all these problems have halting problems of their own, defined by the halting of computers that have access to their solutions, that are strictly harder. And then you have the infinitely many halting problems of these halting problems of these incomparable problems, each leading to very hard problems that are still incomparable with our ’normal’ halting problems through Turing reducibility.

The definition of hardness we use can’t say which of these problems is harder. Clearly they are different, but since neither is Turing reducible to the other, neither is harder than the other. But that makes sense, I don’t think there is any real way to compare the hardness of such problems.

Conclusion

The landscape of uncomputable decision problems is dauntingly huge. We have seen that there are much harder problems than just the halting problem and that there is no hardest problem. Next time you struggle to come up with the answer to a hard question you can console yourself with the idea that it could be much worse. They could have asked you to the decide the halting^1000 problem and we’ve seen how truly hard that is.

-

The cover photo was made by Matt Howard and used under the CC BY-SA 2.0 from Commons. ↩︎

-

No human can beat the best chess computers like Stockfish anymore. The world chess champion Kasparov was already defeated in a match by the chess computer Deep Blue in 1997, see https://en.wikipedia.org/wiki/Deep_Blue_versus_Garry_Kasparov. ↩︎

-

With the creation of AlphaGo the best Go players can’t beat computers anymore either. See https://en.wikipedia.org/wiki/AlphaGo_versus_Ke_Jie. ↩︎

-

From https://commons.wikimedia.org/wiki/File:Kasparov-30.jpg. Used under the CC BY-SA 3.0. ↩︎

-

Solving the problem in polynomial time here means that the time the algorithm takes on a problem of a certain size is bounded by a polynomial function on that size. If this does not exist, then the time it takes grows very fast and the algorithm usually gets practically impossible to compute in any reasonable time. We usually assume that P≠NP and say that problems in P are easier than NP-hard problems, but this has not been proven. For a brief introduction to complexity theory, you can check out this PDF ↩︎

-

This sketch of the proof is based on the proof concept given on Wikipedia, which is based on a proof given by Christopher Strachey in C. Strachey, An impossible program, The Computer Journal, Volume 7, Issue 4, January 1965, Page 313, https://doi.org/10.1093/comjnl/7.4.313 ↩︎

-

In fact, there are infinitely many, but we’ll get to that. ↩︎

-

More accurately, the Turing degrees are classes of problems of the same unsolvability. So the Turing degrees contain problems, more than that the problems simply have Turing degrees. But that distinction is not really relevant for our purposes. ↩︎

-

Also, instead of problems we should talk about subsets of the set of all natural numbers. But these correspond to our decision problems, we can imagine every number representing a certain input to our problem and the inclusion in the subset to represent whether the answer is ‘yes’ or ’no’. ↩︎

-

With ‘get the answer to Y for free’ I mean that our program can use a function that always gives the answer to problem instances of Y in finite time. The real definition of Turing reducibility is a bit more technical, it involves Turing machines instead of computers and oracles that give the free answers. ↩︎

-

Of course, the Python functions we pass to

haltscan’t use thehaltsfunction, ashaltsis not something that can be expressed in Python. Ourhaltsfunction only needs to be able to say whether normal Python functions halt, so it side steps the paradox that Turing used to proof that the halting problem is unsolvable on normal computers. Here we still assume the the halting problem is unsolvable, we just imagine there is some other machine that ‘magically’ gives us the right answers. ↩︎ -

Interestingly, a computer that can send messages back in time to itself could act as such a

haltsfunction. See this paper: Aaronson, S., Bavarian, M., & Gueltrini, G. (2016). Computability theory of closed timelike curves. arXiv preprint arXiv:1609.05507. ↩︎ -

We call a machine that ‘magically’ computes the answer to a hard problem an oracle. So the halts oracle would somehow give us the answer to the halting problem, and this would be used by our postulated

halts2function. ↩︎ -

It is a widely known fact that we can import almost anything in Python, sadly no one made a library that gives us the

haltsfunction yet. See this relevant xkcd ↩︎ -

We have only looked at this for halting problems, but it is actually the case for all problems by taking the Turing jump from its Turing degree. ↩︎

-

The busy beaver function is especially cool, because it is itself uncomputable. Only the first few values are known because it grows so fast. The googolplex value of the busy beaver function is really really really unimaginably large. ↩︎

-

This is a consequence of the fact that every Turing degree except 0 has another incomparable Turing degree and that every Turing degree has infinitely many problems in it, see here. ↩︎